Our Work

Our work focuses on the practical engineering of large foundation models optimized for high-level reasoning capabilities.

On top of these models, we prototype AI agents that can accomplish goals on our behalf, starting with agents that help us code. We believe that seriously using these agents to accelerate our own work is necessary to shed light on how to improve both the underlying capabilities of our reasoning models and the interaction design of agents.

Ultimately, we hope to release systems that enable anyone to build robust, custom AI agents that can accomplish larger goals and safely work for us in the real world.

Highlights

Imbue raises $200M to build AI systems that can reason and code

We’re excited to announce our latest funding round, a $200M Series B at a valuation of over $1 billion, with participation from Astera…

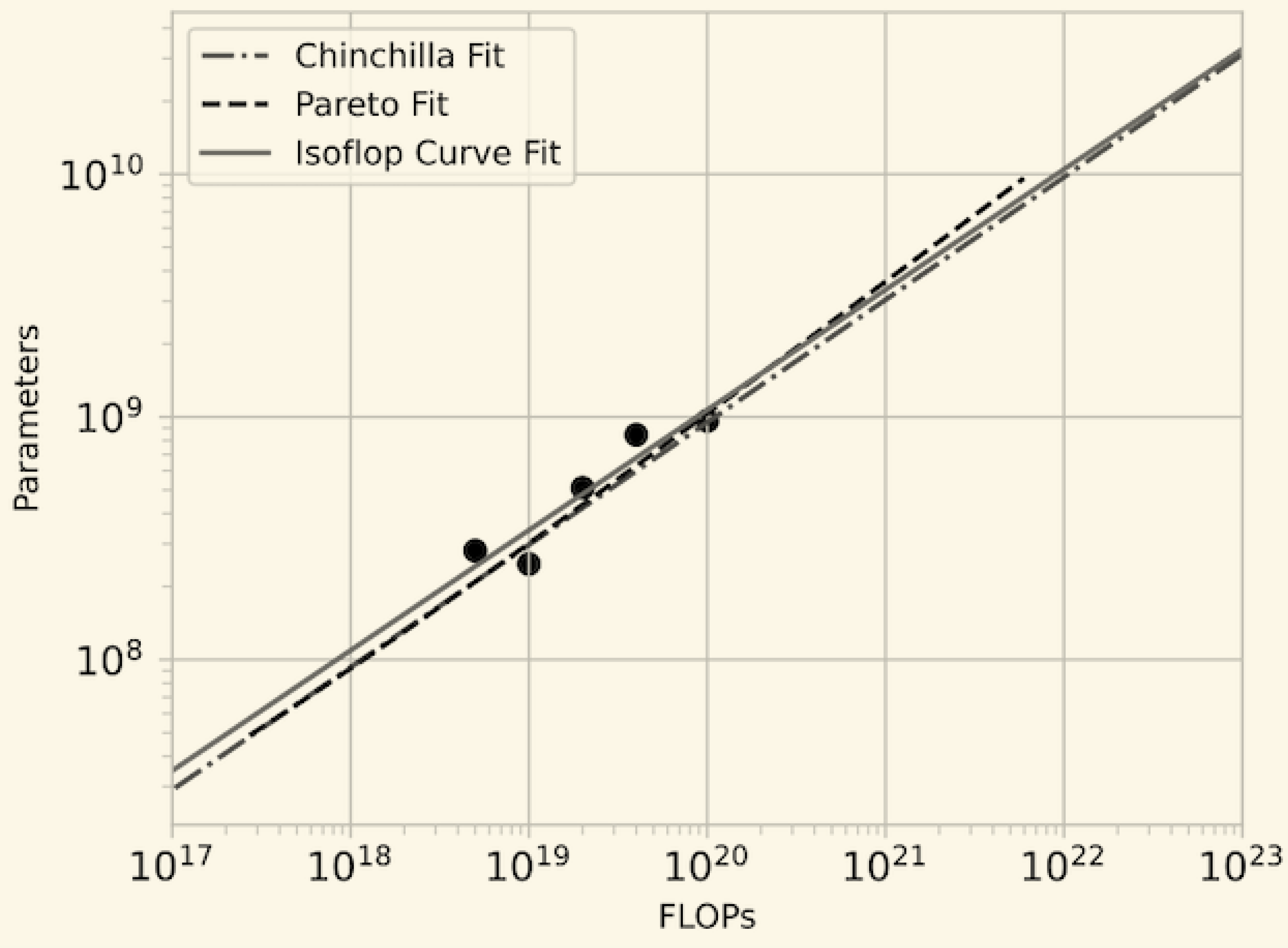

Scaling Laws For Every Hyperparameter Via Cost-Aware HPO

In this post, we introduce CARBS, a cost-aware hyperparameter optimizer that: Automatically reproduces the Chinchilla scaling law for…


Our Approach to Agents

We take a full-stack approach towards effective agents

To build reasoning models that provide a robust foundation for AI agents, we work on:

- Models: We pretrain very large (>100B-parameter) models, optimized for internal reasoning benchmarks.

- Agents: On top of our models, we prototype agents that we use internally for serious work. Many of our agents today write code, because that's the primary work we do every day.

- Interfaces: Today's AI chat interfaces are skeuomorphic. We think interface invention can solve many core problems around agent robustness, trust, and collaboration.

- Tools: We invest heavily in building tools to speed up our iteration loop—from agent debugging interfaces to hyperparameter optimizers like CARBS.

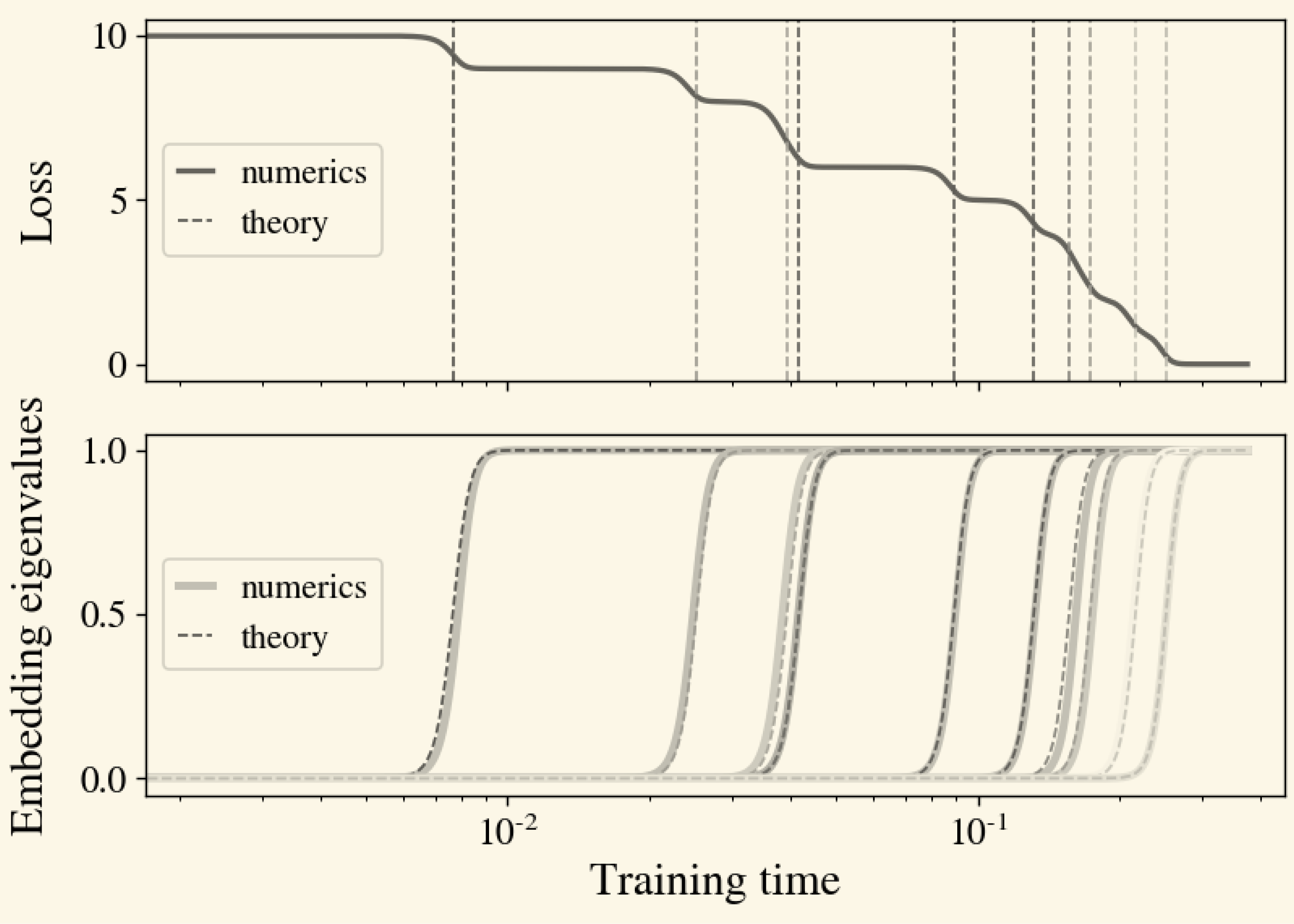

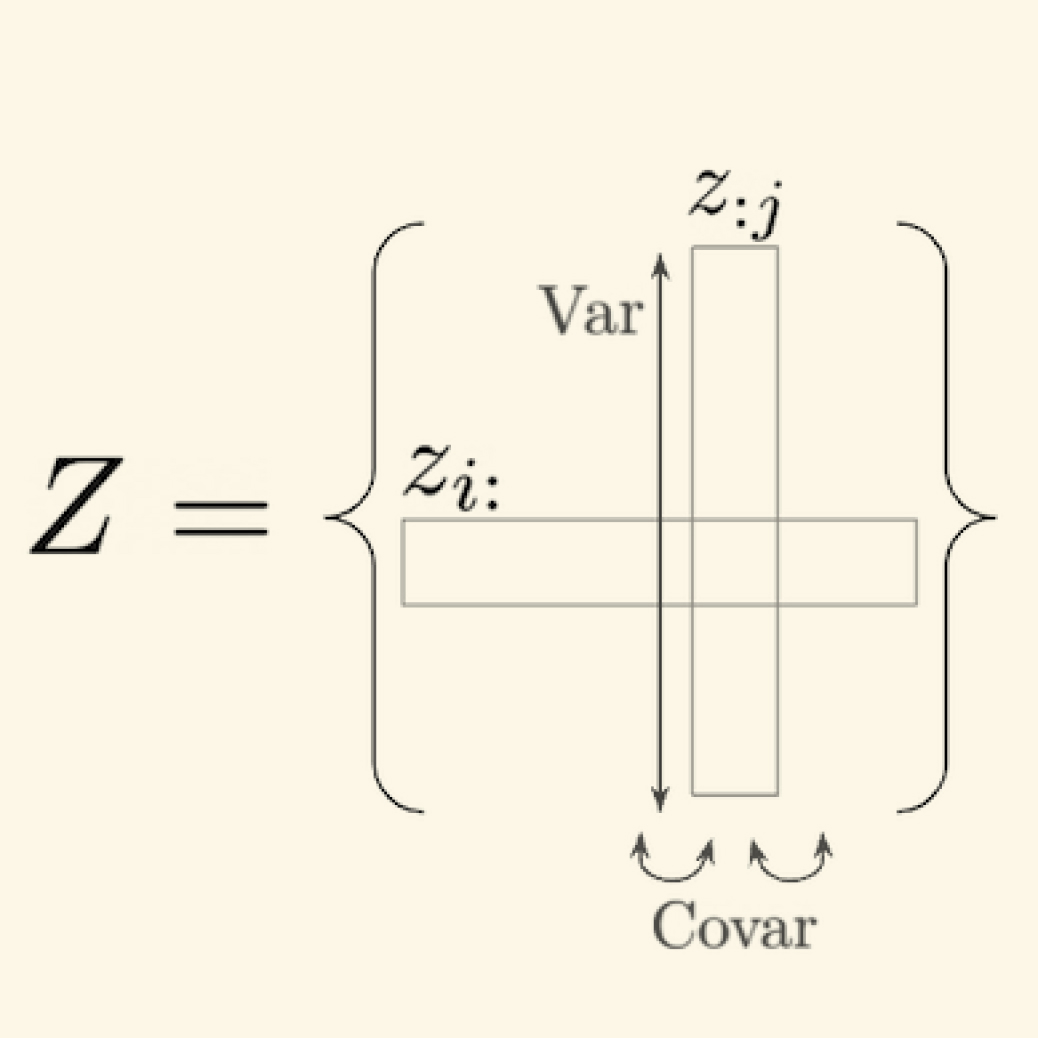

- Theory: We pursue fundamental laws behind deep learning in order to create a robust foundation for agents.

Critically, our full-stack approach unlocks feedback loops designed to speed up our work. Designing agents and tools helps us build better models, in turn unlocking even more useful agents that enable even better models.