We are Generally Intelligent, a new, independent research company whose goal is to develop generally capable AI agents with human-like intelligence in order to solve problems in the real world.

We believe that generally intelligent computers could someday unlock extraordinary potential for human creativity and insight. However, today’s AI models are missing several key elements of human intelligence, which inhibits the development of general-purpose AI systems that can be deployed safely. For example, today’s systems struggle to learn from others, extrapolate safely, or learn continuously from small amounts of data.

Our work aims to understand the fundamentals of human intelligence in order to engineer safe AI systems that can learn and understand the way humans do. We believe such systems could empower humans across a wide range of fields, including scientific discovery, materials design, personal assistants and tutors, and many other applications we can’t yet fathom.

We’re backed by long-term investors who understand that our project does not include medium-term commercialization milestones. These backers have contributed over $20 million and committed more than $100 million in future funding, structured as a combination of options and technical milestones. They include Jed McCaleb (founder of the Astera Institute), Tom Brown (lead author of GPT-3), Jonas Schneider (former robotics lead at OpenAI), and Drew Houston (CEO of Dropbox). Our advisory board includes Tim Hanson (cofounder of Neuralink) and Celeste Kidd (Professor of Psychology at UC Berkeley).

We’re primarily a research organization. While our ultimate aim is to deploy human-level AI systems that can generalize to a wide range of economically useful tasks, our current focus is on researching and engineering these systems’ core capabilities, and on developing appropriate frameworks for their governance.

Our approach to developing intelligent agents

Given that intelligence is often defined as the “ability to achieve goals in a wide range of environments,” our approach is to construct an array of tasks for general agents to solve, layering on more complex tasks as the capabilities of the agents we develop grow. To support this, we conduct research into the theoretical foundations of deep learning, optimization, and reinforcement learning, which helps us create such agents more effectively.

We leverage large-scale compute to train our agents, though in a slightly different way from other organizations. We focus on training many different agent architectures so that we can explore the space of possible designs broadly and better understand each component.

We believe that this fundamental understanding is essential for engineering safe and robust systems. Just as it is difficult to build safe bridges or chemical processes without understanding the underlying theory and components, we think it will be difficult to make safe and capable AI systems without such understanding.

Open-sourcing Avalon, a reinforcement learning research environment

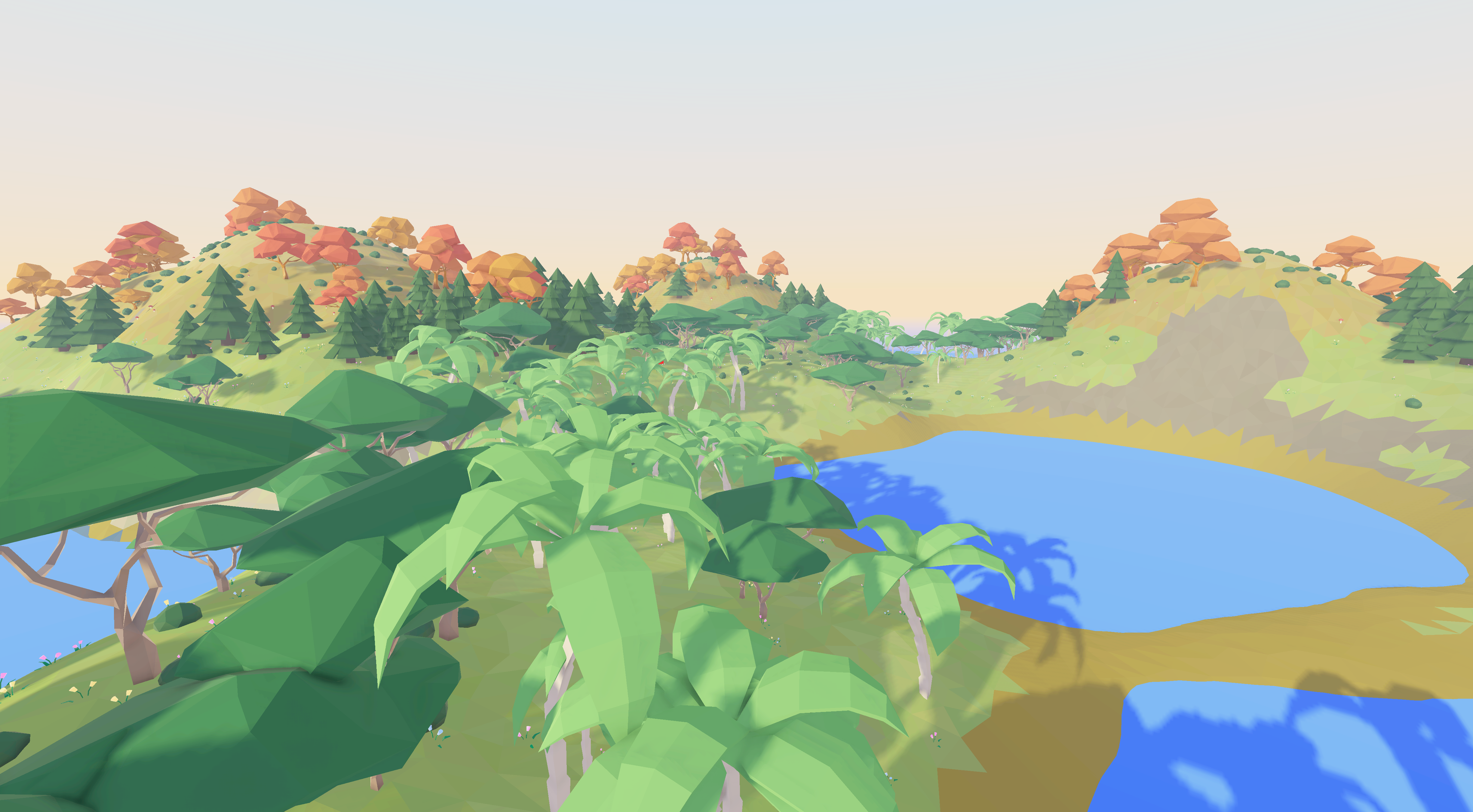

One example of what our approach looks like in practice is Avalon, a reinforcement learning simulator, environment and benchmark that we will be presenting at NeurIPS 2022, and which we are open-sourcing today.

Avalon is the first reinforcement learning benchmark of its kind that allows agents to learn from complex, procedurally generated 3D worlds. Within them, a single agent must learn to solve the types of problems that, in an evolutionary sense, led to the emergence of our species’ unique cognitive abilities.

The current version of Avalon defines a wide range of simple tasks, such as climb, jump, and throw, that are foundational aspects of intelligence for animal species. As our agents make progress, we add progressively harder tasks that correspond to cognitive developmental milestones for human intelligence. For example, we’re currently exploring tasks that correspond to linguistic instruction and within-lifetime learning, although these tasks aren’t yet part of the version of the Avalon benchmark we’re open-sourcing today.

Avalon is not only one of the fastest simulators of its kind, but also one of the most accessible. Because it is built on top of the open source Godot game engine, Avalon inherits Godot’s rich editor and vibrant community, which help other researchers customize and create the worlds that best answer their own research questions.

We’ve included a library of baselines, and our benchmark includes scoring metrics evaluated against hundreds of hours of human performance. Our project page contains a comprehensive set of tutorials, and gives more details on how to get started—it’s as easy as downloading a single file!
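As a rough illustration of what scoring an agent against human performance can look like, here is a minimal sketch that divides an agent’s per-task success rate by the human success rate on the same task and averages the ratios. The task names are taken from the examples above, but the function `human_normalized_score`, the data layout, and the normalization formula are hypothetical assumptions for illustration, not Avalon’s actual scoring code.

```python
from statistics import mean


def human_normalized_score(agent: dict[str, float], human: dict[str, float]) -> float:
    """Hypothetical metric: average of per-task agent/human success ratios.

    Both dicts map a task name to a success rate in [0, 1]. This is an
    illustrative assumption, not Avalon's published scoring code.
    """
    ratios = []
    for task, human_rate in human.items():
        if task not in agent:
            raise KeyError(f"missing agent result for task {task!r}")
        if human_rate <= 0:
            raise ValueError(f"human success rate for {task!r} must be positive")
        # A ratio of 1.0 means the agent matches human performance on this task.
        ratios.append(agent[task] / human_rate)
    return mean(ratios)


# Hypothetical numbers, for illustration only.
human_rates = {"climb": 0.8, "jump": 0.9, "throw": 0.5}
agent_rates = {"climb": 0.4, "jump": 0.45, "throw": 0.25}
print(human_normalized_score(agent_rates, human_rates))
```

A plain ratio is the simplest choice; another common convention subtracts a random-agent baseline from both the agent and human scores before dividing.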

So far, NeurIPS reviewers have responded positively to Avalon, and we’d love to hear from other researchers with comments or questions. We hope it proves a useful tool that allows researchers to more easily ask questions about generalization, robustness, and other critical aspects of intelligence that are missing from today’s models.

Of course, Avalon is only a portion of the work we do at Generally Intelligent. We have other work currently under submission and development on AI safety, theoretical approaches to self-supervised learning, optimization, and other topics that are core to our development of general-purpose agents that could someday be safely deployed in the real world.

What’s next

We’ll be presenting Avalon at NeurIPS 2022 in New Orleans. We’re a sponsor there, so come by our poster, presentation, or booth to say hello and learn more about our approach. We’ll be sharing more of our work on our blog in the coming months, and continuing to highlight researchers on our podcast who’ve published results we find insightful.

If you’re a researcher interested in collaborating with our team, please feel free to reach out. We’re always happy to share our ideas and help in the ways we can.

We’re also looking to hire researchers and engineers from a highly diverse range of backgrounds—with or without experience in machine learning or a PhD—who are driven by a desire to uncover fundamental truths about the nature of intelligence, and to tackle the hard problem of engineering those priors into AI systems. We take an unusually interdisciplinary approach that blends software engineering, physics, developmental psychology, machine learning, neuroscience and other fields.

If you want to work on a principled approach to building safe general intelligence, a problem that could significantly impact the trajectory of human progress, we’d love to hear from you!